Build an Advanced Analytics Engine Around Azure Cloud Services

Why & How To Build A Microsoft Big Data Solution

We can build a big data solution simply as an experimental platform for investigating data, or as a more comprehensive solution that integrates with your existing data management and BI systems. While there is no formal set of steps for designing and implementing big data solutions, there are several approaches that we recommend based on our industry experience. Thinking through these approaches up front helps you achieve the results you require more quickly, and can save considerable time and effort while keeping pricing competitive.

Problem Statement

The rapid increase in digitized information has produced a data deluge. The data comes from multiple sources such as cloud services, telemetry, call centers, embedded systems, devices, and solutions. Unstructured data is much harder to analyze than structured data, yet to make meaningful decisions an organization has to study unstructured data along with structured data. Organizations also need real-time insights from input files rather than waiting too long for results from structured data.

Gartner Research Findings

According to Gartner, 80% of organizational data is unstructured, and 8% of global companies are using big data analytics so far. By 2017, 25% of global companies will adopt big data analytics for at least one use case for security and fraud detection.

Four Steps To Start Your Big Data Solution

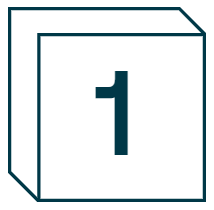

Big Data Architecture

Big data is not a stand-alone technology, or just a new type of data querying mechanism. It is a significant part of the Microsoft Business Intelligence (BI) and Analytics product range, and a vital component of the Microsoft data platform.

Microsoft and Hortonworks offer three distinct solutions based on HDP:

- HDInsight. This is a cloud-hosted service available to Azure subscribers that uses Azure clusters to run HDP, and integrates with Azure storage.

- Hortonworks Data Platform (HDP) for Windows. This is a complete package that you can install on Windows Server to build your own fully-configurable big data clusters based on Hadoop. It can be installed on physical on-premises hardware, or in virtual machines in the cloud.

- Microsoft Analytics Platform System. This is a combination of the massively parallel processing (MPP) engine in Microsoft Parallel Data Warehouse (PDW) with Hadoop-based big data technologies. It uses the HDP to provide an on-premises solution that contains a region for Hadoop-based processing, together with PolyBase—a connectivity mechanism that integrates the MPP engine with HDP, Cloudera, and remote Hadoop-based services such as HDInsight. It allows data in Hadoop to be queried and combined with on-premises relational data, and data to be moved into and out of Hadoop.

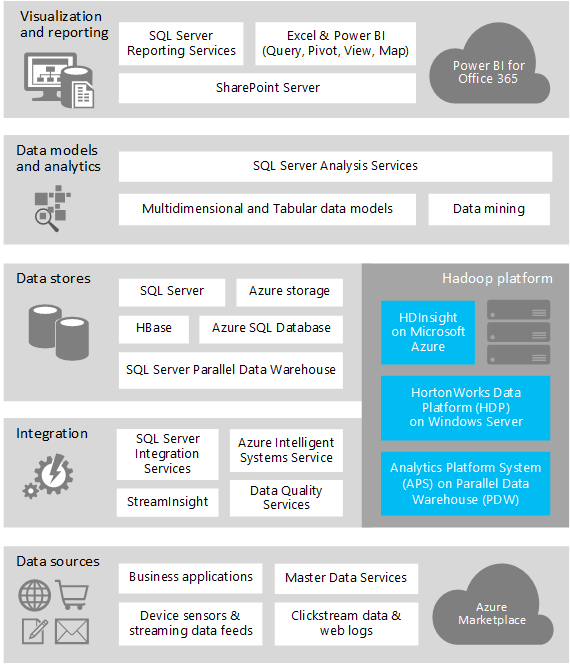

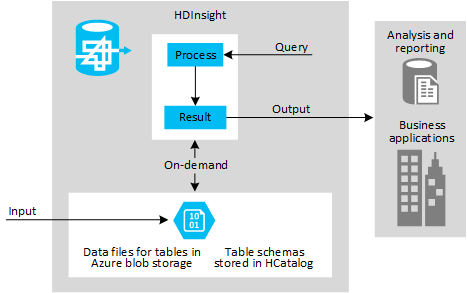

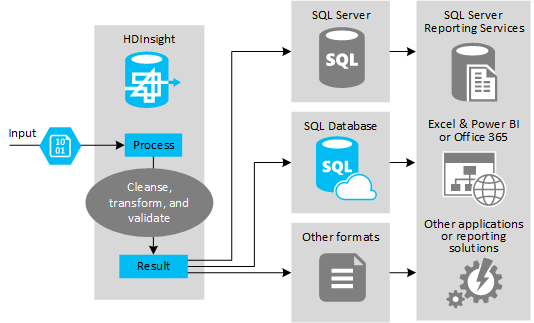

Model Use Cases

Hadoop-based big data systems such as HDInsight allow you to store both the source data and the results of queries executed over this data. You can also store schemas (or, to be precise, metadata) for tables that are populated by the queries you execute. These tables can be indexed, although there is no formal mechanism for managing key-based relationships between them. However, you can create data repositories that are robust and reasonably low cost to maintain, which is especially useful if you need to store and manage huge volumes of data.

Hadoop-based big data systems such as HDInsight can be used to extract and transform data before you load it into your existing databases or data visualization tools. Such solutions are well suited to performing categorization and normalization of data, and for extracting summary results to remove duplication and redundancy. This is typically referred to as an Extract, Transform, and Load (ETL) process.
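The ETL pattern described above can be illustrated with a minimal, self-contained Python sketch. It is not HDInsight code: the records, field names, and helper functions are hypothetical, and in a real cluster the same steps would more likely be written as Hive or Pig queries. It only shows the shape of the process: normalize the raw rows, remove duplicates, and produce a summary that could be loaded into a database or visualization tool.

```python
from collections import defaultdict

# Hypothetical raw records (e.g. call-center events); field names are illustrative only.
RAW_ROWS = [
    {"customer": " Alice ", "channel": "PHONE", "minutes": "12"},
    {"customer": "alice", "channel": "phone", "minutes": "12"},  # duplicate once normalized
    {"customer": "Bob", "channel": "Web", "minutes": "5"},
    {"customer": "bob", "channel": "phone", "minutes": "7"},
]


def normalize(row):
    """Transform: trim and lower-case text fields, convert minutes to an integer."""
    return (
        row["customer"].strip().lower(),
        row["channel"].strip().lower(),
        int(row["minutes"]),
    )


def extract_transform(rows):
    """Normalize every row, then drop exact duplicates while keeping first-seen order."""
    seen = set()
    clean = []
    for row in rows:
        record = normalize(row)
        if record not in seen:
            seen.add(record)
            clean.append(record)
    return clean


def summarize(records):
    """Aggregate total minutes per channel: the summary that would be loaded downstream."""
    totals = defaultdict(int)
    for _customer, channel, minutes in records:
        totals[channel] += minutes
    return dict(totals)


clean_records = extract_transform(RAW_ROWS)
channel_totals = summarize(clean_records)
print(clean_records)
print(channel_totals)
```

Normalizing before deduplicating matters here: the first two rows differ only in whitespace and letter case, so they collapse into one record only after the transform step.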

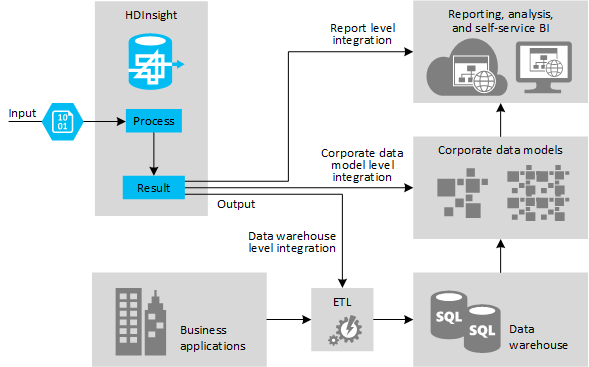

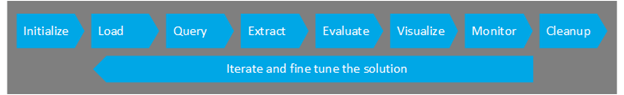

End-to-End Big Data Solutions

This section is divided into convenient areas that make it easier to understand the challenges, options, solutions, and considerations for each stage. It describes and demonstrates the individual tasks that are part of typical end-to-end big data solutions.

- Obtaining the data and submitting it to the cluster. During this stage we decide how we will collect the data we have identified as the source, and how we will get it into our big data solution for processing. Often we will store the data in its raw format to avoid losing any useful contextual information it contains, though we may choose to do some pre-processing before storing it to remove duplication or to simplify it in some other way. We must also make several decisions about how and when we will initialize a cluster and the associated storage.

- Processing the data. After we have started to collect and store the data, the next stage is to develop the processing solutions we will use to extract the information we need. While we can usually use Hive and Pig queries for even quite complex data extraction, we will occasionally need to create map/reduce components to perform more complex queries against the data.

- Visualizing and analyzing the results. Once you are satisfied that the solution is working correctly and efficiently, we can plan and implement the analysis and visualization approach that you require. This may be loading the data directly into an application such as Microsoft Excel, or exporting it into a database or enterprise BI system for further analysis, reporting, charting, and more.

- Building an automated end-to-end solution. At this point it will become clear whether the solution should become part of the customer organization’s business management infrastructure, complementing the other sources of information used to plan and monitor business performance and strategy. If this is the case, we recommend automating and managing some or all of the solution to provide predictable behaviour, and perhaps so that it is executed on a schedule.
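For readers unfamiliar with the map/reduce components mentioned in the processing stage, the following is a rough single-process Python sketch of the idea. It does not use Hadoop or any HDInsight API; the log lines and the function names `map_phase`, `shuffle`, and `reduce_phase` are assumptions made purely for illustration. On a real cluster the framework distributes the map and reduce calls across nodes and performs the grouping step itself.

```python
from collections import defaultdict
from functools import reduce

# Hypothetical telemetry lines; the format "<device> <level> <message>" is an assumption.
LOG_LINES = [
    "device-7 ERROR sensor timeout",
    "device-3 INFO heartbeat",
    "device-7 ERROR sensor timeout",
    "device-9 WARN low battery",
]


def map_phase(line):
    """Map: emit a (key, 1) pair, where the key is the log level in the second field."""
    level = line.split()[1]
    return (level, 1)


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single count."""
    return key, reduce(lambda a, b: a + b, values)


mapped = [map_phase(line) for line in LOG_LINES]
grouped = shuffle(mapped)
level_counts = dict(reduce_phase(key, values) for key, values in grouped.items())
print(level_counts)
```

A query this simple would normally be a one-line `GROUP BY` in Hive; custom map/reduce components become worthwhile only when the per-record logic is too complex to express that way.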

Advanced Analytics

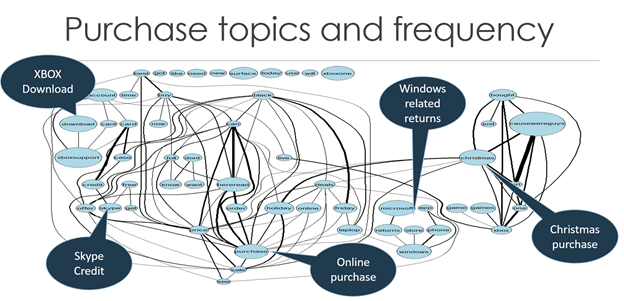

Advanced analytics provides algorithms for complex analysis of either structured or unstructured data. It includes sophisticated statistical models, machine learning, neural networks, text analytics, and other advanced data mining techniques. Among its many use cases, it can be deployed for finding patterns in data, prediction, optimization, forecasting, and complex event processing and analysis. Examples include predicting churn, identifying fraud, market basket analysis, and understanding website behavior. Advanced analytics does not include database querying and reporting, or OLAP cubes.
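As a small illustration of one of the examples named above, market basket analysis, the sketch below counts how often pairs of items appear together and reports each pair's support (the fraction of baskets containing both items). The baskets and the `pair_support` helper are hypothetical, and this is only the simplest form of the technique; production work would typically use a dedicated algorithm such as Apriori on far larger data.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets; item names are illustrative only.
BASKETS = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"butter", "jam"},
    {"bread", "milk", "jam"},
]


def pair_support(baskets):
    """Return the support of every item pair: baskets containing both / total baskets."""
    counts = Counter()
    for basket in baskets:
        # Sort so that each pair is always counted under the same (a, b) ordering.
        for pair in combinations(sorted(basket), 2):
            counts[pair] += 1
    total = len(baskets)
    return {pair: n / total for pair, n in counts.items()}


support = pair_support(BASKETS)
best_pair = max(support, key=support.get)
print(best_pair, support[best_pair])
```

Pairs with high support are candidates for cross-selling or shelf-placement decisions, which is the kind of pattern finding this section describes.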

We have built onsite and offshore capabilities with a dedicated team of data scientists, and a BI research and development lab built exclusively for Advanced Analytics solutions. Please see our case studies below for your reference.

Case Studies